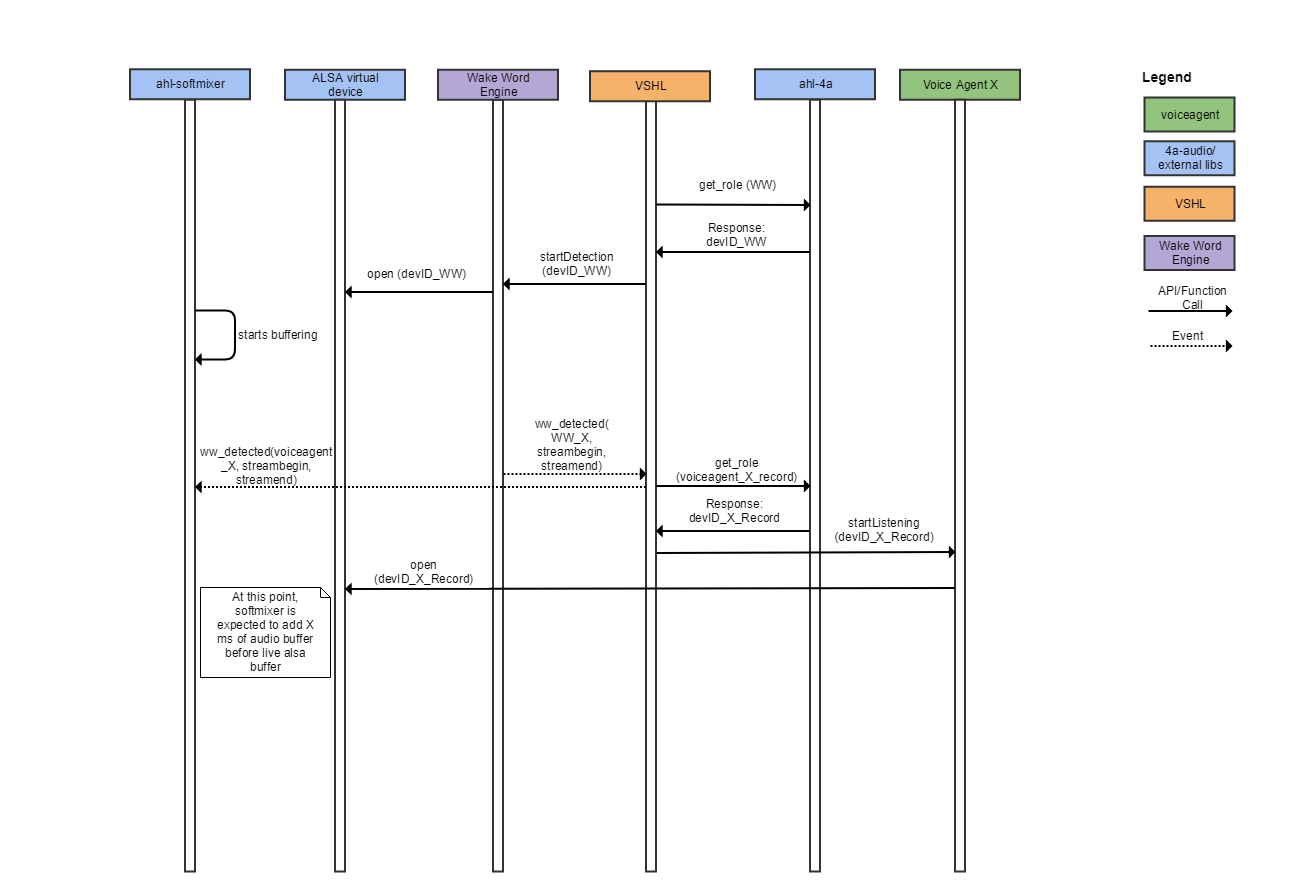

The diagram above summarizes the discussion at the AGL Santa Clara F2F (Sept 2018) about wakeword detection. The feasibility of this proposed flow has not yet been ascertained.

Some of the open questions:

- How do we control access to the audio buffer across voice agents, so that voice agent X can read it only when it is supposed to? (Currently this is gated by the startListening API call sent by VSHL.)

- Different voice agents may require different amounts of silence before speech for ASR calibration; we need to establish a configuration for that.

- Wakeword detection, caching, and voice agent ASR recognition happen in three separate processes. How do we keep all three processes in sync on buffer position? For example, ahl-softmixer needs to know the exact position of the wakeword so that it is not included when ASR recognition begins.

- How do we accommodate voice barge-in in this scenario?

- Do we need to support the scenario where a voice agent also needs access to the uttered wakeword as part of the cached buffer?

- The event subscription flow and other definitions need to be formalized.

- We need to decide whether the wakeword engine can safely close the audio buffer on wakeword detection without risking ahl-softmixer dropping audio packets.
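The buffer-position question can be made concrete with a minimal sketch, assuming (hypothetically) that the capture side tags every frame with an absolute frame index and that the wakeword engine reports the wakeword's start and end in those same units. Positions then become plain integers that the three processes can exchange in events, rather than pointers into each other's buffers. `CaptureRing`, `WakewordEvent`, `AgentAudioConfig`, and `asr_start_pos` are illustrative names, not existing AGL APIs, and the per-agent `include_wakeword`/`preroll_frames` settings are only one possible answer to the silence and cached-wakeword questions.

```python
from collections import deque
from dataclasses import dataclass
from itertools import islice


class CaptureRing:
    """Fixed-capacity cache of audio frames addressed by absolute frame position.

    Hypothetical design: every frame gets a monotonically increasing index, so
    separate processes can refer to positions as plain integers.
    """

    def __init__(self, capacity: int) -> None:
        self._frames: deque = deque(maxlen=capacity)  # oldest frames fall off the left
        self._next_pos = 0  # absolute index the next written frame will get

    def write(self, frames) -> None:
        for frame in frames:
            self._frames.append(frame)
            self._next_pos += 1

    @property
    def next_pos(self) -> int:
        return self._next_pos

    @property
    def oldest_pos(self) -> int:
        # Absolute index of the oldest frame still cached.
        return self._next_pos - len(self._frames)

    def read(self, start: int, end: int) -> list:
        """Return cached frames in [start, end), clamped to what is still held."""
        start = max(start, self.oldest_pos)
        end = min(end, self._next_pos)
        if start >= end:
            return []
        offset = start - self.oldest_pos
        return list(islice(self._frames, offset, offset + (end - start)))


@dataclass
class WakewordEvent:
    # Hypothetical event payload: absolute positions from the wakeword engine.
    start_pos: int
    end_pos: int  # first frame after the wakeword


@dataclass
class AgentAudioConfig:
    # Hypothetical per-agent settings.
    include_wakeword: bool = False
    preroll_frames: int = 0  # leading audio wanted before the wakeword, if included


def asr_start_pos(event: WakewordEvent, cfg: AgentAudioConfig) -> int:
    """Absolute position at which the agent's ASR stream should begin."""
    if cfg.include_wakeword:
        return max(0, event.start_pos - cfg.preroll_frames)
    return event.end_pos  # skip the wakeword entirely
```

For example, after writing 25 frames into a ring of capacity 10, `ring.read(asr_start_pos(WakewordEvent(17, 20), AgentAudioConfig()), ring.next_pos)` yields frames 20 to 24, with the wakeword excluded. If a configured pre-roll reaches past the oldest cached frame, `read` silently clamps; a real design would have to decide whether that case is an error.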

2 Comments

Naveen Bobbili

In my opinion, we should keep voice agent information out of the wakeword service. The wakeword service should fire an event indicating which wakeword it has detected, and VSHL consumes this event. VSHL holds a map from wakeword to voice agent to voice agent role, using a name such as VoiceAgent_<VAID>_<Role NUM> (e.g. VoiceAgent_VA-001_0). VSHL requests that role from AHL; once AHL grants it, VSHL signals the appropriate voice agent to startListening. When the voice agent starts listening, the softmixer should provide X ms of buffered audio ahead of the live ALSA buffer. After the voice agent finishes processing the audio, it sends an event such as endofspeech_detected, and VSHL relinquishes the role by informing the softmixer. From then on, no voice agent can read the audio input, because no role is active.

Another option is for VSHL to only signal startListening and make the voice agent responsible for requesting the role. This helps if the voice agent runs in a separate process. However, activating and deactivating the role given to the voice agent would still be the responsibility of VSHL.
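The first option in this comment can be sketched as a small state machine, assuming only one capture role may be active at a time. `StubAhl`, `Vshl`, and their methods are hypothetical stand-ins for AHL and VSHL, not their real APIs; the role number is fixed at 0, the wakeword strings are made up, and signalling startListening is reduced to recording the agent ID.

```python
from typing import Optional


def role_name(va_id: str, role_num: int) -> str:
    # Naming scheme from the comment: VoiceAgent_<VAID>_<Role NUM>
    return f"VoiceAgent_{va_id}_{role_num}"


class StubAhl:
    """Hypothetical AHL/softmixer stand-in: at most one capture role is active."""

    def __init__(self) -> None:
        self.active_role: Optional[str] = None

    def request_role(self, role: str) -> bool:
        if self.active_role is None:
            self.active_role = role
            return True
        return False  # another role currently owns the microphone

    def release_role(self, role: str) -> None:
        if self.active_role == role:
            self.active_role = None

    def can_read(self, role: str) -> bool:
        # Only the holder of the active role may read audio input.
        return self.active_role == role


class Vshl:
    def __init__(self, ahl: StubAhl, ww_to_agent: dict) -> None:
        self.ahl = ahl
        self.ww_to_agent = ww_to_agent  # wakeword -> voice agent ID
        self.listening = []  # agents signalled to startListening (demo only)

    def on_wakeword_detected(self, wakeword: str) -> Optional[str]:
        va_id = self.ww_to_agent.get(wakeword)
        if va_id is None:
            return None  # wakeword not mapped to any voice agent
        role = role_name(va_id, 0)
        if not self.ahl.request_role(role):
            return None  # AHL refused; no audio may flow
        self.listening.append(va_id)  # stands in for signalling startListening
        return role

    def on_end_of_speech(self, va_id: str) -> None:
        # endofspeech_detected from the agent: VSHL relinquishes the role.
        self.ahl.release_role(role_name(va_id, 0))
```

In this sketch, a second wakeword arriving while `VoiceAgent_VA-001_0` is active is simply refused; whether it should instead preempt the active agent is the barge-in question raised in the open questions.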

Arijit Chattopadhyay

I agree that the WW engine should not be aware of the voice agent. That was an oversight on my part, and I have updated the diagram.

To me, the first option you outlined aligns with the diagram above.

For option 2, making the voice agent responsible for requesting the role may not save any API calls (from the AHL perspective) when wakeword detection runs in the VSHL security context. Either VSHL or the voice agent has to request the role, and in either case audio streaming cannot begin until AHL grants it.
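The API-call argument can be checked with a small sketch that counts role requests under both options. `CountingAhl`, `Agent`, and `handle_wakeword` are hypothetical names; the stub grants every request immediately, which real AHL arbitration would not necessarily do.

```python
class CountingAhl:
    """Hypothetical AHL stub: grants every role request and counts them."""

    def __init__(self) -> None:
        self.requests = 0
        self.granted = set()

    def request_role(self, role: str) -> bool:
        self.requests += 1
        self.granted.add(role)
        return True


class Agent:
    def __init__(self, role: str, ahl: CountingAhl, requests_own_role: bool) -> None:
        self.role = role
        self.ahl = ahl
        self.requests_own_role = requests_own_role  # True under option 2
        self.streaming = False

    def start_listening(self) -> None:
        if self.requests_own_role:
            self.ahl.request_role(self.role)
        # In both options, audio may only flow once AHL has granted the role.
        if self.role not in self.ahl.granted:
            raise RuntimeError(f"{self.role} not granted; cannot stream audio")
        self.streaming = True


def handle_wakeword(option: int) -> tuple:
    """Run one wakeword-to-streaming cycle under option 1 or option 2."""
    ahl = CountingAhl()
    agent = Agent("VoiceAgent_VA-001_0", ahl, requests_own_role=(option == 2))
    if option == 1:
        ahl.request_role(agent.role)  # VSHL requests the role on the agent's behalf
    agent.start_listening()  # VSHL signals startListening in both options
    return ahl, agent
```

Both options make exactly one `request_role` call per utterance; they differ only in which security context issues it, and an agent that starts listening without a granted role cannot stream.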

Let me know your thoughts!